We have just concluded LAK15, an outstandingly run conference hosted expertly by Marist College in Poughkeepsie, NY.

In the closing panel, a few of us shared thoughts on the state of the field, and we were invited to comment on what had struck us at LAK. First, the record-breaking attendance of 300 (?) delegates, including ~100 newcomers (right?), was very heartening. The substantial level of corporate sponsorship also reflects the rise of the field and the growth of the marketplace. In a far-from-systematic analysis of LAK trends, and like Program Chair Agathe Merceron in her opening welcome (plus several others), I’ll pick out Writing Analytics as the theme of the year.

Writing is the primary window onto learners’ minds for educational assessment in most disciplines. Just as students learn to “show their working” in maths to demonstrate how they reach a solution, in the medium of language they must make their thinking visible, leading the reader through the chain of reasoning by appropriately using the linguistic constructions that are the hallmarks of educated, scholarly writing. In that light, it may seem surprising that Writing Analytics has only become highly visible this year, and I’m not sure what the explanation is. Perhaps it simply takes a few years for a new field to connect, one by one, with key people in the tributaries that flow into it. At LAK11 there were no writing analytics papers, and there were just six across LAK12-14, including two DCLA workshop papers [1-6]. (A slight tangent: the LAK Data Challenge has until now been a scientometrics challenge, and so represents a different kind of writing analytics, one aimed at researchers.)

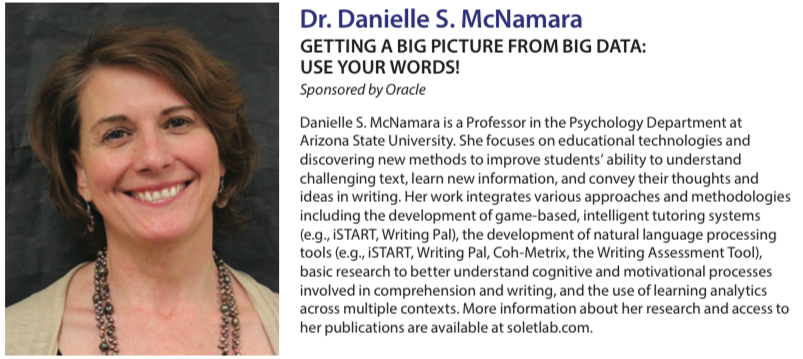

We convened the pre-conference workshops on Discourse-Centric Learning Analytics (DCLA13; DCLA14) in order to bring in key researchers in fields such as CSCL and AIED. In a similar vein, this year LAK invited Danielle McNamara, SoLET Lab Director at Arizona State University, as a keynote speaker.

This put writing analytics on the radar for many delegates, making them aware of different classes of writing support tools and of the capacity of text analysis to give insight into the cognition and emotions of student writers. The ASU team had a strong presence in the LAK15 papers and demos [7-11], which also strengthened the relevance of LAK to the K-12 community. In addition, there were papers spanning very different technologies and genres of writing from Queensland University of Technology (adults, reflective writing) and The Open University (adults, masters-level essays) [12-13].

The team at CAST also gave a practitioner report on their Writing Key web app, a lower-tech approach that structures K-6 children’s writing with strong templates and cues, rather than using NLP to extract structure from free text. Here’s the abstract, since it’s not in the proceedings:

Interestingly, because they ran out of time to embed visualisations in the tool, they hand-generated prototype views in Tableau to elicit reactions. A simple bubble chart of the most frequently used words proved the most useful.
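The report does not say how those word counts were produced, so as a rough illustration only, the data behind a “most frequent words” bubble chart can be computed along these lines. This is a minimal Python sketch, not CAST’s implementation: it assumes simple lowercase tokenisation, and the `STOP_WORDS` set and `word_frequencies` function are hypothetical names introduced here.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list for illustration only;
# a real tool would use a much fuller list.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "is", "in", "it", "was"}


def word_frequencies(text: str, top_n: int = 10) -> list[tuple[str, int]]:
    """Return the top_n most frequent non-stop-words in text.

    Tokenisation is a naive assumption: lowercase the text and keep
    runs of letters and apostrophes as words.
    """
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in STOP_WORDS)
    # most_common returns (word, count) pairs, highest count first;
    # each pair could drive one bubble (size = count) in a chart.
    return counts.most_common(top_n)


if __name__ == "__main__":
    draft = "The dog ran. The dog barked and the cat ran away."
    for word, count in word_frequencies(draft, top_n=3):
        print(word, count)
```

The output is just a ranked list of (word, count) pairs, which is the kind of table a tool like Tableau can map onto bubble sizes; the stop-word filtering matters because otherwise function words such as “the” dominate the chart.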

Not surprisingly, automated essay grading evokes strong reactions from many people. To my mind, a more appropriate strategy, and the one we are pursuing at UTS:CIC, is to place a lighter burden on the technology and to avoid any suggestion that skilled readers can be automated out of their jobs. Right now, students receive no feedback on their drafts: there simply isn’t the human capacity to provide it, except in exclusive educational settings with extremely low student-teacher ratios. Yet we know that timely, personalised, formative feedback on work-in-progress is about the most powerful way to build learner confidence and skill. So the use case that I (and UTS academics) find most compelling is that of students checking their own draft texts. We’ve gone from spell-checkers, to grammar checkers, to readability checkers, to plagiarism checkers. The next step in that evolution: academic writing checkers.

Writing Analytics @LAK Conference: bibliography

1. McNely, B. J., P. Gestwicki, J. H. Hill, P. Parli-Horne and E. Johnson (2012). Learning analytics for collaborative writing: a prototype and case study. Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, Vancouver, British Columbia, Canada, ACM. http://dx.doi.org/10.1145/2330601.2330654

2. Lárusson, J. A. and B. White (2012). Monitoring student progress through their written “point of originality”. Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, Vancouver, British Columbia, Canada, ACM. http://dx.doi.org/10.1145/2330601.2330653

3. Simsek, D., S. Buckingham Shum, Á. Sándor, A. De Liddo and R. Ferguson (2013). XIP Dashboard: Visual Analytics from Automated Rhetorical Parsing of Scientific Metadiscourse. 1st International Workshop on Discourse-Centric Learning Analytics, at 3rd International Conference on Learning Analytics & Knowledge, Leuven, Belgium (Apr. 8-12, 2013). Open Access Eprint: http://oro.open.ac.uk/37391

4. Southavilay, V., K. Yacef, P. Reimann and R. A. Calvo (2013). Analysis of collaborative writing processes using revision maps and probabilistic topic models. Proceedings of the Third International Conference on Learning Analytics and Knowledge, Leuven, Belgium, ACM. http://dx.doi.org/10.1145/2460296.2460307

5. Whitelock, D., D. Field, J. T. E. Richardson, N. Van Labeke and S. Pulman (2014). Designing and Testing Visual Representations of Draft Essays for Higher Education Students. 2nd International Workshop on Discourse-Centric Learning Analytics, Fourth International Conference on Learning Analytics and Knowledge, Indianapolis, Indiana, USA. https://dcla14.files.wordpress.com/2014/03/dcla14_whitelock_etal.pdf

6. Simsek, D., S. Buckingham Shum, A. De Liddo, R. Ferguson and Á. Sándor (2014). Visual analytics of academic writing. Proceedings of the Fourth International Conference on Learning Analytics And Knowledge, Indianapolis, Indiana, USA, ACM. http://dx.doi.org/10.1145/2567574.2567577

7. Snow, E. L., L. K. Allen, M. E. Jacovina, C. A. Perret and D. S. McNamara (2015). You’ve got style: detecting writing flexibility across time. Proceedings of the Fifth International Conference on Learning Analytics And Knowledge, Poughkeepsie, New York, ACM. http://dx.doi.org/10.1145/2723576.2723592

8. Dascalu, M., S. Trausan-Matu, P. Dessus and D. S. McNamara (2015). Discourse cohesion: a signature of collaboration. Proceedings of the Fifth International Conference on Learning Analytics And Knowledge, Poughkeepsie, New York, ACM. http://dx.doi.org/10.1145/2723576.2723578

9. Crossley, S., L. K. Allen, E. L. Snow and D. S. McNamara (2015). Pssst… textual features… there is more to automatic essay scoring than just you! Proceedings of the Fifth International Conference on Learning Analytics And Knowledge, Poughkeepsie, New York, ACM. http://dx.doi.org/10.1145/2723576.2723595

10. Allen, L. K., E. L. Snow and D. S. McNamara (2015). Are you reading my mind?: modeling students’ reading comprehension skills with natural language processing techniques. Proceedings of the Fifth International Conference on Learning Analytics And Knowledge, Poughkeepsie, New York, ACM. http://dx.doi.org/10.1145/2723576.2723617

11. Dascalu, M., L. L. Stavarche, S. Trausan-Matu, P. Dessus, M. Bianco and D. S. McNamara (2015). ReaderBench: An Integrated Tool Supporting Both Individual and Collaborative Learning. Demo, Fifth International Conference on Learning Analytics And Knowledge, Poughkeepsie, New York. (See also the accompanying book chapter.)

12. Simsek, D., Á. Sándor, S. Buckingham Shum, R. Ferguson, A. De Liddo and D. Whitelock (2015). Correlations between automated rhetorical analysis and tutors’ grades on student essays. Proceedings of the Fifth International Conference on Learning Analytics And Knowledge, Poughkeepsie, New York, ACM. http://dx.doi.org/10.1145/2723576.2723603. Open Access Eprint: http://oro.open.ac.uk/42042

13. Gibson, A. and K. Kitto (2015). Analysing reflective text for learning analytics: an approach using anomaly recontextualisation. Proceedings of the Fifth International Conference on Learning Analytics And Knowledge, Poughkeepsie, New York, ACM. http://dx.doi.org/10.1145/2723576.2723635